ARTIFICIAL INTELLIGENCE (AI)

INFORMATION SHARING & ANALYSIS CENTER (AI-ISAC)

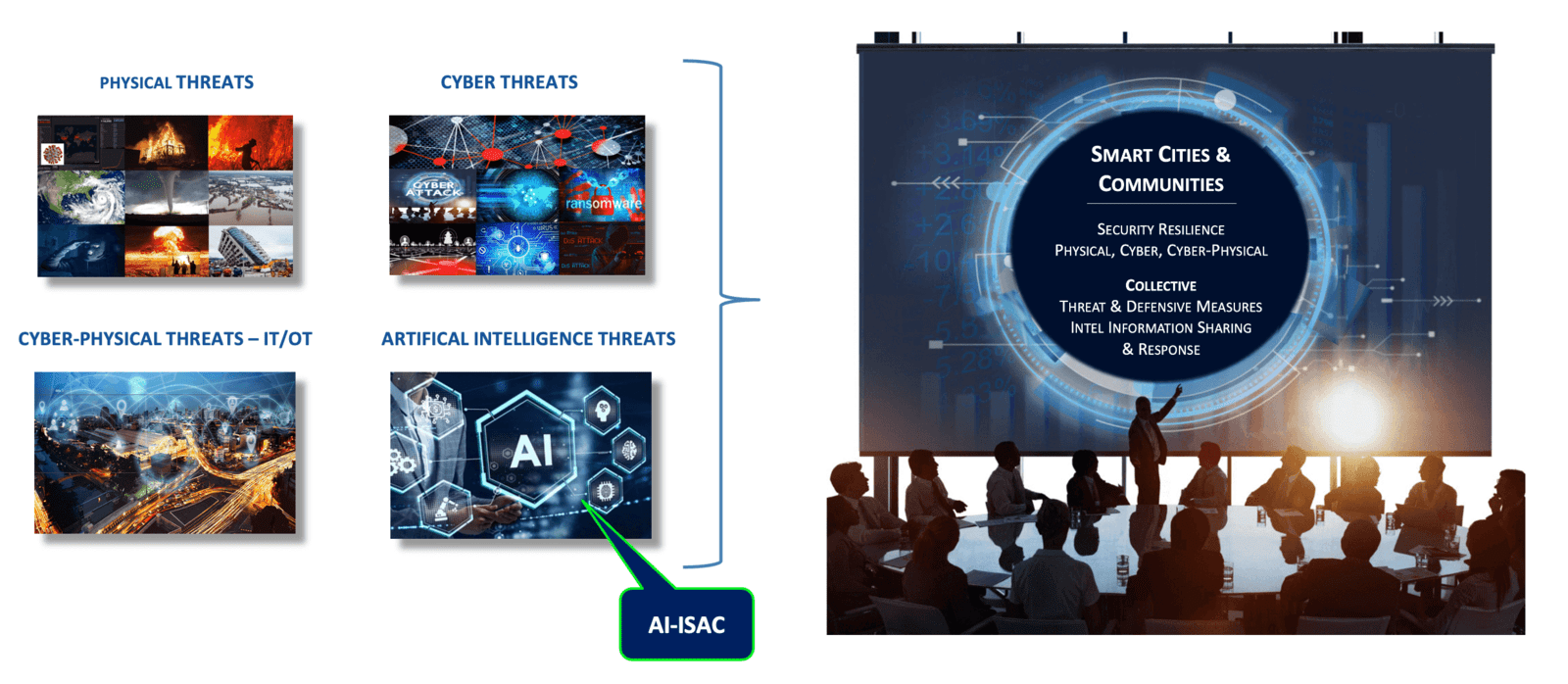

MONITORING OF THE GLOBAL ARTIFICIAL INTELLIGENCE (AI) THREAT LANDSCAPE

AI Security Threat & Defensive Measures Information Sharing, Analysis & Coordinated Response

IACINet Intel Information Sharing Infrastructure, Tools & Technologies, IACI IT/OT Security Lab, R&D/Testing, Best Practice Adoption, Education, Exercise

Collaborative Partners:

Government (Federal, State, Local, Tribal, Territorial), International | Law Enforcement

U.S. Department of Homeland Security (DHS) | National Institute of Standards & Technology (NIST)

Public-Private Critical Infrastructure Owners & Operators, ISAOs / ISACs, Associations, Academia

Trusted Security & Technology Partners

AI-ISAC Division, International Association of Certified ISAOs (IACI) | IACI-CERT, Center for Space Education, NASA/Kennedy Space Center, FL

Municipalities - Cities, Towns, Villages, Counties, Rural Regions

Public Safety - 911, EMS, Fire, Law Enforcement

Critical Infrastructure - Chemical, Commercial Facilities, Communications, Critical Manufacturing, Dams, Defense, Elections, Energy, Financial Services, Food & Agriculture, Government Facilities, Healthcare, Information Technology, Transportation, Water & Wastewater

Public Citizens

October 2023

U.S. PRESIDENTIAL EXECUTIVE ORDER -

SAFE, SECURE, AND TRUSTWORTHY DEVELOPMENT AND USE OF ARTIFICIAL INTELLIGENCE

- New Standards, Tools and Tests for AI Safety & Security

- Require Developers of the Most Powerful AI Systems to Share Safety Test Results and Other Critical Information with the U.S. Government

- Protect Against Risks of Using AI to Engineer Dangerous Biological Materials

- Protect Americans from AI-Enabled Fraud and Deception

- Establish an Advanced Cybersecurity Program to Develop AI Tools that Find and Fix Vulnerabilities in Critical Software

- Order the Development of a National Security Memorandum that Directs Further Action on AI and Security

- Protect Americans' Privacy; Advance Equity and Civil Rights

- Standing Up for Consumers, Patients and Students; Supporting Workers

- Promoting Innovation and Competition

- Advancing American Leadership Abroad

- Ensuring Responsible and Effective Government Use of AI

November 2023

THE BLETCHLEY DECLARATION

Signed by 28 Countries and the European Union Attending the AI Safety Summit Hosted by the UK Government

Nations from Around the World Have Joined the Bletchley Declaration on AI Safety. The Historic Event Took Place at the First Global AI Safety Summit, Held in the UK and Attended by Representatives of Governments Worldwide.

The Bletchley Declaration Serves as a Comprehensive Document Outlining a Program to Identify Risks Associated with the Advancement of AI Technologies. Its Aim is to Foster a Scientific Understanding of These Risks and to Collaborate on Developing International Mitigation Policies.

Countries Signing the Bletchley Declaration

Australia, Brazil, Canada, Chile, China, European Union, France, Germany, India, Indonesia, Ireland, Israel, Italy, Japan, Kenya,

Kingdom of Saudi Arabia, Netherlands, Nigeria, The Philippines, Republic of Korea, Rwanda, Singapore, Spain, Switzerland, Türkiye,

Ukraine, United Arab Emirates, United Kingdom of Great Britain and Northern Ireland, United States of America

October 2022

U.S. FEDERAL GOVERNMENT - BLUEPRINT FOR AN AI BILL OF RIGHTS

Making Automated Systems Work for The American People

The Blueprint for an AI Bill of Rights is a Set of Five Principles and Associated Practices to Help Guide the Design, Use, and Deployment of Automated Systems to Protect the Rights of the American Public in the Age of Artificial Intelligence.

These Principles are a Blueprint for Building and Deploying Automated Systems that are Aligned with Democratic Values and Protection of Civil Rights, Civil Liberties and Privacy.

1. Safe and Effective Systems - Protection from Unsafe or Ineffective Systems

2. Algorithmic Discrimination Protections - Protection from Discrimination by Algorithms; Systems Should Be Designed and Used in an Equitable Way

3. Data Privacy Protections - Protection from Abusive Data Practices via Built-In Protections, and Agency over How Your Data is Used

4. Notice and Explanation - Knowing That an Automated System is Being Used and Understanding How It Impacts You

5. Human Alternatives, Consideration and Fallback - Ability to Opt Out Where Appropriate, and Access to a Person Who Can Help Remedy Problems

October 2023

LEADING AI COMPANIES - PUBLIC COMMITMENT TO THE U.S. EXECUTIVE ORDER

AI SAFETY, SECURITY & TRUST

The Following Companies Have Voluntarily Committed to the Executive Order's Three Principles (Safety, Security, Trust) Fundamental to the Future of AI:

Adobe | Amazon | Anthropic | Cohere | Google | IBM | Inflection | Meta

Microsoft | Nvidia | OpenAI | Palantir | Salesforce | Scale AI | Stability AI

Earning the Public's Trust


November 2023

U.S. DHS - CISA ROADMAP FOR ARTIFICIAL INTELLIGENCE (2023 - 2024)

CISA's Role in Securing AI

CISA's Strategic Plan 2023-2025 Underpins CISA's Adaptation to AI Technologies; Each of CISA's Four Strategic Goals is Relevant to and Impacted by AI:

Goal 1 - Cyber Defense

Goal 2 - Risk Reduction and Resilience

Goal 3 - Operational Collaboration

Goal 4 - Agency Unification

CISA's Roadmap for Artificial Intelligence

Unify and Accelerate CISA's AI Efforts Along Five Lines of Effort (LOEs)

Line of Effort 1 - Responsibly Use AI to Support Our Mission

Line of Effort 2 - Assure AI Systems

Line of Effort 3 - Protect Critical Infrastructure from Malicious Use of AI

Line of Effort 4 - Collaborate and Communicate on Key AI Efforts with the Interagency, International Partners, and the Public

Line of Effort 5 - Expand AI Expertise in Our Workforce

2023

U.S. DEPT. OF COMMERCE | NATIONAL INSTITUTE OF STANDARDS & TECHNOLOGY (NIST)

ARTIFICIAL INTELLIGENCE RISK MANAGEMENT FRAMEWORK (AI RMF 1.0)

NIST AI RMF

The AI RMF Refers to an AI System as an Engineering or Machine-Based System that Can, for a Given Set of Objectives, Generate Outputs such as Predictions, Recommendations, or Decisions Influencing Real or Virtual Environments.

Goal - To Offer a Resource to Organizations Designing, Developing, Deploying, or Using AI Systems to Help Manage the Many Risks of AI and Promote Trustworthy and Responsible Development and Use of AI Systems.

Voluntary, Rights-Preserving, Non-Sector-Specific and Use-Case Agnostic - Provides Flexibility to Organizations of All Sizes, in All Sectors and Throughout Society to Implement the Framework's Approaches.

Practical - Designed to Adapt to the AI Landscape as AI Technologies Continue to Develop, and to Be Operationalized by Organizations in Varying Degrees and Capacities so Society Can Benefit from AI While Also Being Protected from Its Potential Harms.

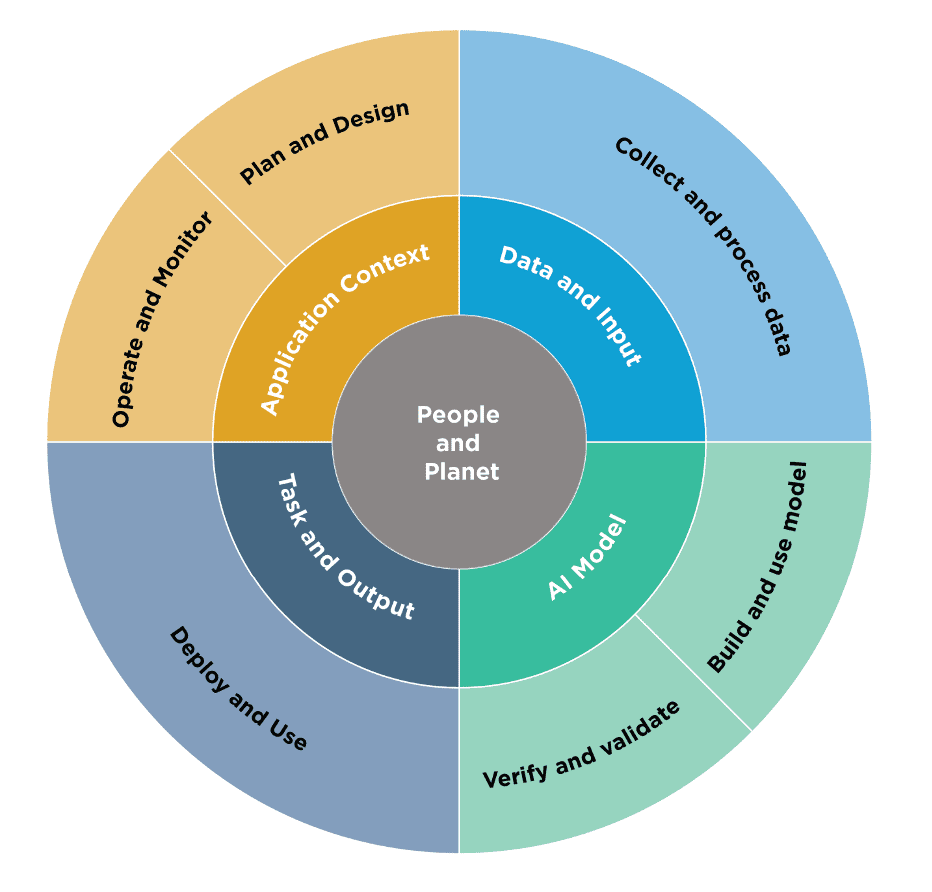

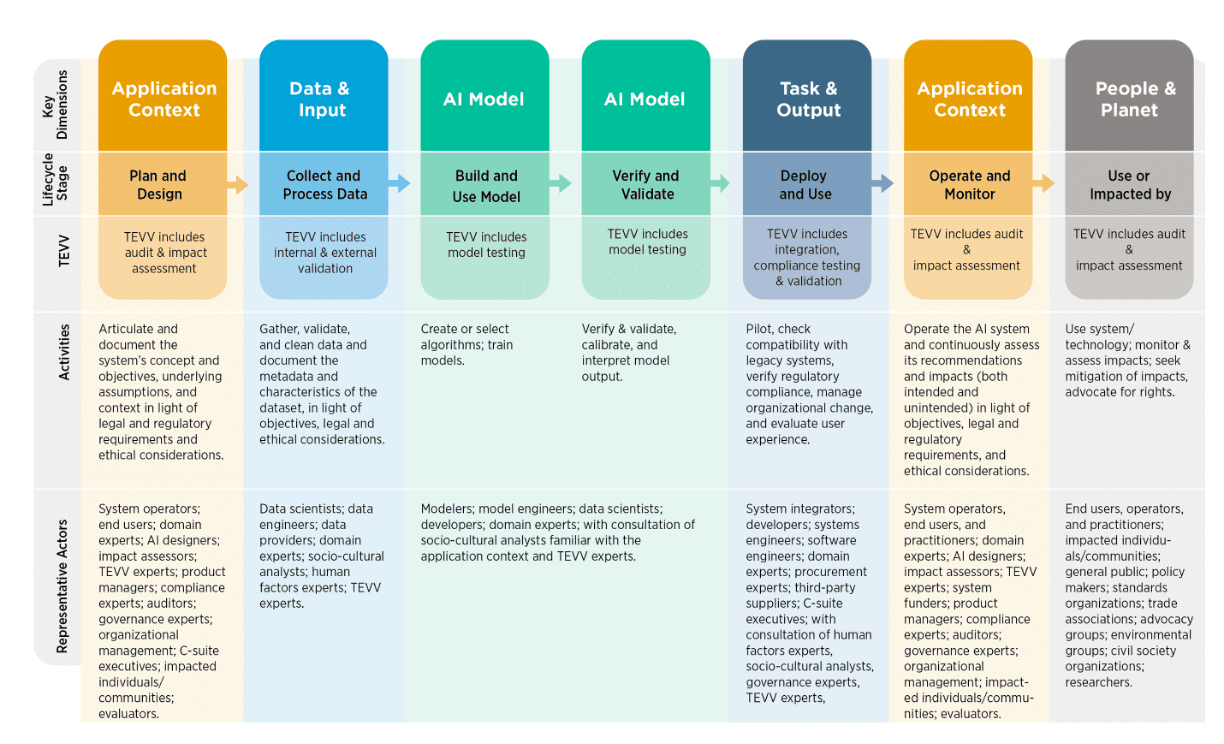

TWO INNER CIRCLES - AI SYSTEMS' KEY DIMENSIONS

OUTER CIRCLE - AI LIFECYCLE STAGES

AI DIMENSIONS - APPLICATION CONTEXT, DATA & INPUT, AI MODEL, TASK & OUTPUT

Primary NIST AI RMF Audience - AI Actors Involved in These Dimensions Who Perform or Manage the Design, Development, Deployment, Evaluation and Use of AI Systems, and Who Drive AI Risk Management Efforts

Risk Management - Starts with the Plan & Design Function in the Application Context and is Performed Throughout the AI System Lifecycle

AI ACTORS - ACROSS THE AI LIFECYCLE

Successful Risk Management - Depends Upon Collective Responsibility Among AI Actors

AI RMF Functions - Require Diverse Teams, Perspectives, Disciplines, Professions and Experiences

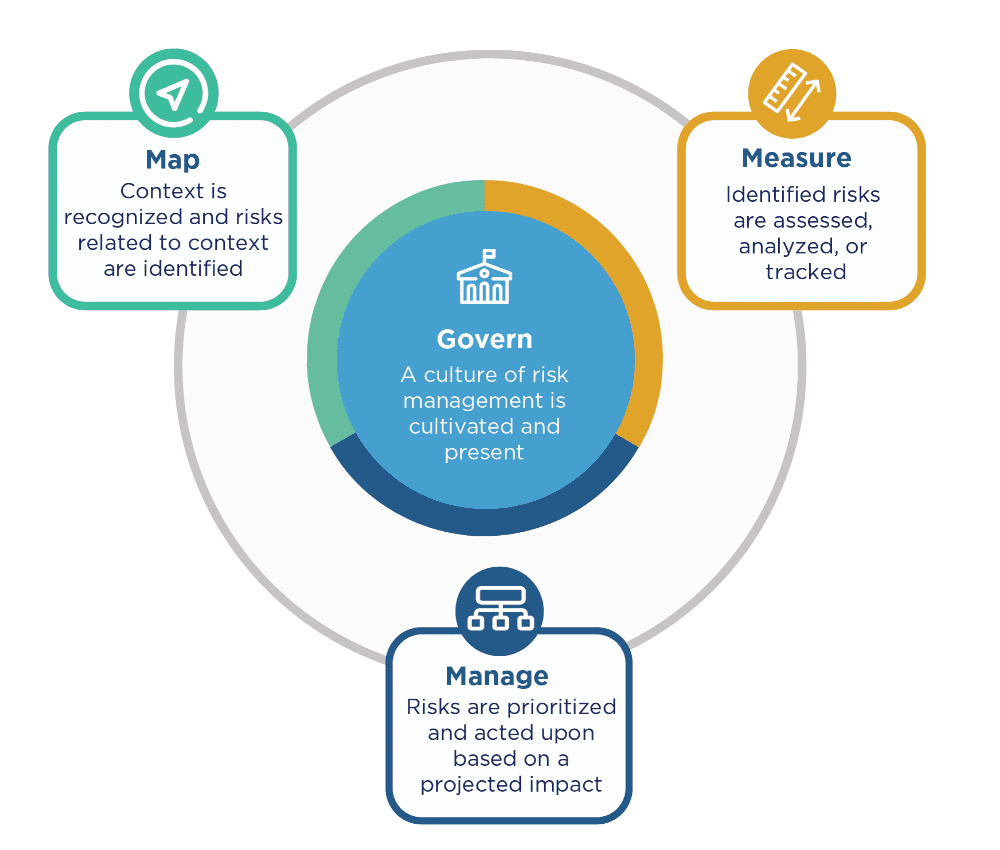

NIST AI RMF CORE FUNCTIONS - GOVERN, MAP, MEASURE, MANAGE

Functions Organize AI Risk Management Activities at Their Highest Level to Govern, Map, Measure and Manage AI Risks.

Each of the High-Level Functions is Broken Down into Categories and Sub-Categories, Which are in Turn Divided into Specific Actions and Outcomes.

Governance is Designed to be a Cross-Cutting Function to Inform and Be Infused Throughout the Other Three Functions.

U.S. NATIONAL ARTIFICIAL INTELLIGENCE RESEARCH AND DEVELOPMENT STRATEGIC PLAN - 2023 UPDATE

The Plan Defines Major AI Research Challenges to Coordinate and Focus Federal R&D Investments.

It Aims to Ensure Continued U.S. Leadership in the Development and Use of Trustworthy AI Systems, Prepare the Current and Future U.S. Workforce for the Integration of AI Systems Across All Sectors, and Coordinate AI Activities Across All Federal Agencies.

Strategy 1 - Make Long-Term Investments in Fundamental and Responsible AI Research

Strategy 2 - Develop Effective Methods for Human-AI Collaboration

Strategy 3 - Understand and Address the Ethical, Legal and Societal Implications of AI

Strategy 4 - Ensure the Safety and Security of AI Systems

Strategy 5 - Develop Shared Datasets and Environments for AI Training and Testing

Strategy 6 - Measure and Evaluate AI Systems through Standards and Benchmarks

Strategy 7 - Better Understand the National AI R&D Workforce Needs

Strategy 8 - Expand Public-Private Partnerships to Accelerate Advances in AI

Strategy 9 - Establish a Principled and Coordinated Approach to International Collaboration in AI Research